Africa is a vast, varied and vexing place but there is no essential thing that is Africa. Dipo Faloyin, the Nigerian writer, sums it up in his very personal book ‘Africa Is Not a Country’. Forget the images you see on TV of safaris, wars, child soldiers and famine, as most of that is through the eyes of visitors who stay in comfortable hotels, working for organisations that have a saviour agenda. There are countries north of the Sahara that are very different from those immediately below. Egypt, Nigeria and South Africa are radically different places at the top, middle and bottom of Africa with substantial economies. There are many Africas; there is no ‘Africa’.

I’m just back from Senegal, the host country this year for E-learning Africa, an event I have been to many times. It remains the defining Learning Technology event on that continent. The brainchild of Rebecca Stromeyer, it goes way beyond the normal conference format, with lively, intense and friendly networking, a session for Education Ministers from all over Africa and a ‘challenging’ debate, which I’ve participated in several times.

You can’t shy away from the chaos when travelling in Senegal. One very large group turned up to a hotel that did not exist. They had been scammed. We turned up at 2am to our hotel and found that, despite it having been booked and paid for, they had sold on our room and we had to find another. Walking around Dakar at 3.30am looking for a taxi is no fun, especially when every taxi driver we used required Kissinger-level negotiations on price. On one occasion, having agreed a generous fare, one driver turned round and claimed that the fare was per person! My son returned to his room one night and found someone else in his hotel bed. The lack of organisational cohesion and training is tangible. On the whole the people are wonderful and chilled but I don’t want to varnish over this stuff, as there is no way the country can develop successful tourism with this level of chaos and hassle.

I have travelled a lot and never seen so many police, even in and around the conference.

I have also never stepped through so many scanners. Every building has one, yet despite me setting off the red lights and bleeps they just wave you through. Many of the staff are not looking at the scanner screen at all, bored and on their smartphones. The country is experiencing political unrest as we speak, with street protests and a Ministerial building being burned after the opposition leader Ousmane Sonko was arrested on trumped-up sexual assault charges. We were stopped at police roadblocks twice and on the way to the airport we saw people being made to lie down on the road in a massive traffic holdup.

There is lots of equipment here but little training, so nothing really works and when it breaks it is catastrophic. Let me give you an example. The flight from Senegal was late, which meant we couldn’t make our connection. The TAP (Air Portugal) office at the airport had someone who quite simply couldn’t be bothered helping. She sat there arrogantly waving us away with her hand, then asking that we pay for another flight! We confirmed in Lisbon that she could have arranged our second leg. The problem is deep-rooted nepotism. She has this job but can’t operate the computer, so just sits there taking the salary. I can’t tell you how many times we had our credit cards declined because the signal or card machine didn’t work.

You have to wrestle with these contradictions. The fundamental fact is that this is a rich continent full of poor people. It is easy to blame colonialism and see everyone as a victim, but there is obvious money around. I saw a blue Bentley outside of the restaurant we were eating in with an old man buying Moet for six women at the table and huge mansions lining the coast. There is wealth here, with huge sums at the peak of the pyramid, a tiny middle class and a vast number who remain poor. The cash is being spent on vanity projects and a vast army and police force who keep the oligarchy in power.

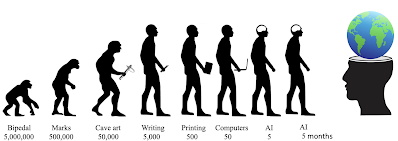

Ok so that is the economic and political backdrop. As I was here to deliver some sessions on AI, how do you position the technology thing?

The white saviour complex sees ‘others’ as the only hope for these hapless Africans. Yet it is the white saviour complex that caricatures them as unable to help themselves, people to be saved from themselves. As I walked through the real market in Dakar, not the ones selling beads back to Western tourists (a neat and startling reversal), I saw a highly entrepreneurial society. A lorry load of old spare parts was being dumped out onto the road, while people bid for the spares. Recycling is a necessity here. This is not a functionally helpless society, it is a society held back by its own leaders, who strip it bare. The tech kids I meet here are highly entrepreneurial and will move things forward. It is the anti-business, keep-them-on-an-NGO-ride attitude that will stop Africa. NGOs are band-aids, not the answer. I feel as though, since the 1980s, it is the NGOs who seem to be full of colonial rhetoric, the new institutional colonialism. The Geldof-inspired ‘Do they know it’s Christmas?’ is perhaps the most condescending song title in history. Africa has more Christians than any other continent, who maybe knew it was Christmas. It also has 450 million Muslims – wonder how they felt? The hideous, theatrical spectacle of celebrity activists grew out of that time and the simplistic rhetoric of binaries continues: northern hemisphere-southern hemisphere, rich-poor, white-black, evil-good.

In any case, we’re not here as tourists, we’re here to get something done. Education and health remain problematic in all poor countries, especially countries like Senegal, where the politicians are clearly rapacious and corrupt. I was the only person in the hall who refused to stand for the Prime Minister after we had waited over an hour and a half for him to grace us with his banal presence. Being here as a white saviour was not my intention. I have been here before in the African Congress building in Addis, Ethiopia, debating that Africa needs vocational skills, not abstract University degrees – we won. I have always supported the spread of free tools and learning on the internet to get to the poor. This has happened with finance, it can happen with education. The infrastructure problem is being solved, via satellites and ever-cheaper smartphones. Sure, the digital divide exists, but constantly painting a picture of a glass half empty while it is being filled is another example of white saviour behaviour.

Michael, my partner in crime in the debate, from Kenya, does something about the problems through his ‘4gotten Bottomillions’ (4BM), Kenya's largest and most trusted WhatsApp Platform, which connects the unqualified and poor to real work and jobs. He is critical of white saviour attacks on US tech companies who pay for data work in Kenya. This, for him, is rich white virtue signalling that denies poor Africans a living. These companies pay above the minimum wage and the work is seen as lucrative. Michael wants to empower Africans, not disempower them through pity. His message to everyone is to suck it up and get on with things, not to wait on grants and NGO benefactors. Until that happens, Africa will remain in psychological chains to others.

My first event was a three-hour workshop on AI, as an astounding technology that really can deliver in education and health. It is already being used by hundreds of millions worldwide to increase productivity and deliver smart advice, support and learning free of charge. This is exactly what Africa needs. Let the young use it, don’t cut Africa out of the progress, as that will turn an entire continent into North Korea and Iran, the two countries that banned it (even Italy saw the error of its ways).

I did another session on online language learning where the presenters were asking young Africans to learn, and get tested on, either English or French. French is, they claimed, the language of culture. Yes – but whose culture? The French. My position was that Generative AI already delivers in over 100 languages, shortly moving towards 1000, many of them African. We have the chance of having a universal teacher on any subject, with strong tutoring and teaching skills available to anyone, anywhere with an internet connection, for free, in any language.

The solution is to get this fantastic, free and powerful educational software to everywhere, not just Africa. Stop using specious arguments about northern hemisphere bias and footnote ethical concerns to stop action. Let Africans decide what's good for them and allow Africa to use AI in the local context. Getting this stuff into the hands of the young and poor is the answer.

My final event was in the Big Debate, on whether ‘AI will do more harm than good in Africa’. Michael and I lost. Why? The audience was largely either the donors or receivers of white saviour aid. They do this for a living and anything that threatens to bypass them and go straight to the working poor is seen as a threat. They need to be in control, seeing themselves as providing educational largesse. You get this a lot at Learning Technology conferences, people who fundamentally don’t like technology.

I'm well aware that the sermon has always been the favoured propaganda method of the saviour and see that I too am part of the problem at such events. But the lack of honesty about the saviour-complex has become a real problem. My hope is that this powerful technology bypasses the oligarchies and saviours and gets into the hands of the people that matter.

The opposition played the usual cards. First the argument from authority, which I always find disturbing, ‘I’m a Professor, therefore I’m right, you’re wrong’. My friend Mark, on the opposition side, gave an eloquent speech brutally attacking the culture of screens and smartphones. I was sitting behind the lectern and noticed that he had read the entire speech, every last word, verbatim, from, guess what… a smartphone! It’s OK for rich white people to use a full stack of tech but not Africa. I rest my case.